
Data modernization drives real value beyond cloud migration. It enables AI at scale, governance, cost efficiency, and sustained business impact.

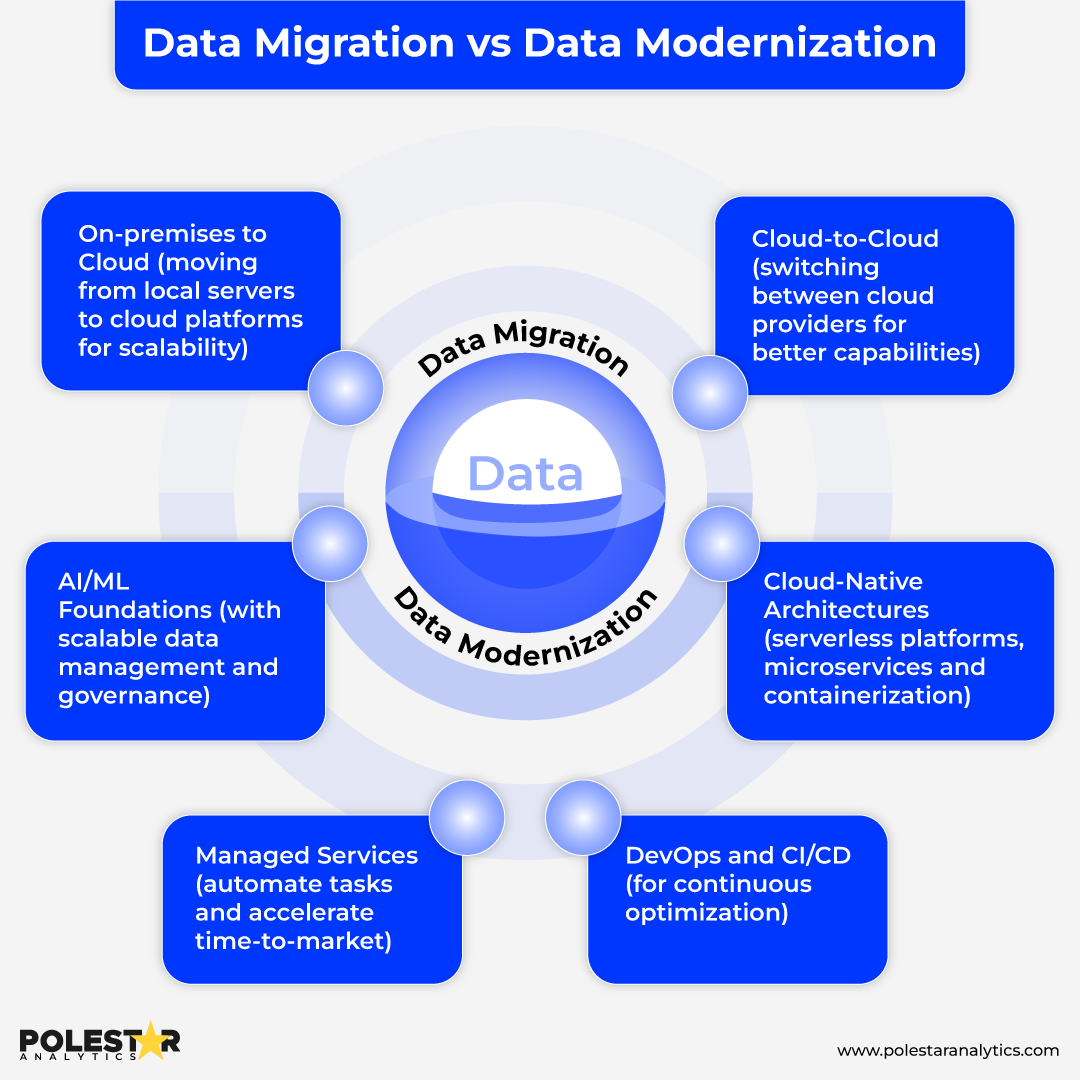

In our previous blog, "Migrate and Modernize Your Data Estate," we explored the fundamental differences between data migration and modernization, and why simply moving to the cloud isn't enough.

Experts like David LeGrand (Alliance Head, Polestar Analytics) suggest that the key lies in building a unified, intelligent data platform that can scale AI initiatives while maintaining governance and security.

Roughly 68% of institutions report that they are currently in the process of data modernization and management.

So, if you haven't started your modernization journey yet, you risk falling behind competitors who are already leveraging these capabilities for competitive advantage!

Otherwise, congratulations on completing your cloud migration! The infrastructure is in place and systems are running. But you've only crossed the starting line!

The global data architecture modernization market is estimated to grow to USD 30.61 billion by 2035, at an estimated CAGR of 12% from 2026 to 2035. Yet despite these significant investments, many organizations struggle because they treat modernization as a destination rather than a journey.

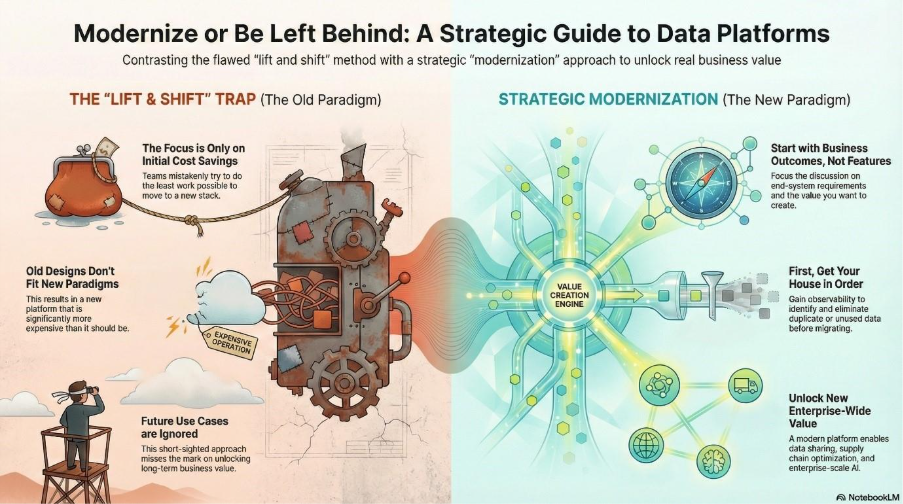

When people talk about modernization, they're almost always comparing it to a “lift-and-shift” alternative. That's where they miss the mark.

Cloud migration and initial modernization are important milestones, but they are far from endpoints in your data modernization strategy. The real transformation begins after migration, when organizations must rethink how they leverage their data assets for competitive advantage.

Before exploring how to implement your data modernization framework, let’s understand the difference between data migration and modernization.

The critical distinction between data migration and data modernization is that migration addresses where your data lives, while modernization transforms how it delivers value.

65% of organizations plan to increase IT funding in 2025, with AI/ML and infrastructure modernization among the top focus areas.

Many organizations approach data modernization with a simple goal: move to the cloud, cut infrastructure costs, and declare victory. This common assumption, however, often leads to projects that run over budget and under-deliver on their promise. It turns out that true modernization is far more than a simple technology swap.

Organizations that successfully implement comprehensive data modernization programs see transformative results:

In our recent fireside chat, Data migration and modernization, Fred Abood, Lead Solutions Architect at Databricks, shared insights on industry best practices. Here are five takeaways that should shape your data modernization framework:

The "lift and shift" approach involves moving existing systems, architecture, and code to a new platform largely unchanged. But the patterns that powered legacy systems are different from those that power a modern Lakehouse, and ignoring this evolution is a recipe for failure.

The traditional approach to a major data initiative is to create a massive, enterprise-wide strategy from the top down. This often involves months of planning, cross-departmental committees, and complex roadmaps that can stall before the first line of code is migrated.

Fred advocates for inverting this model!

The most effective starting point is to identify a "digestible piece" of the business. Begin where you have strong relationships and a team that is willing to collaborate. This focus on securing quick, tangible wins builds momentum and demonstrates value early.

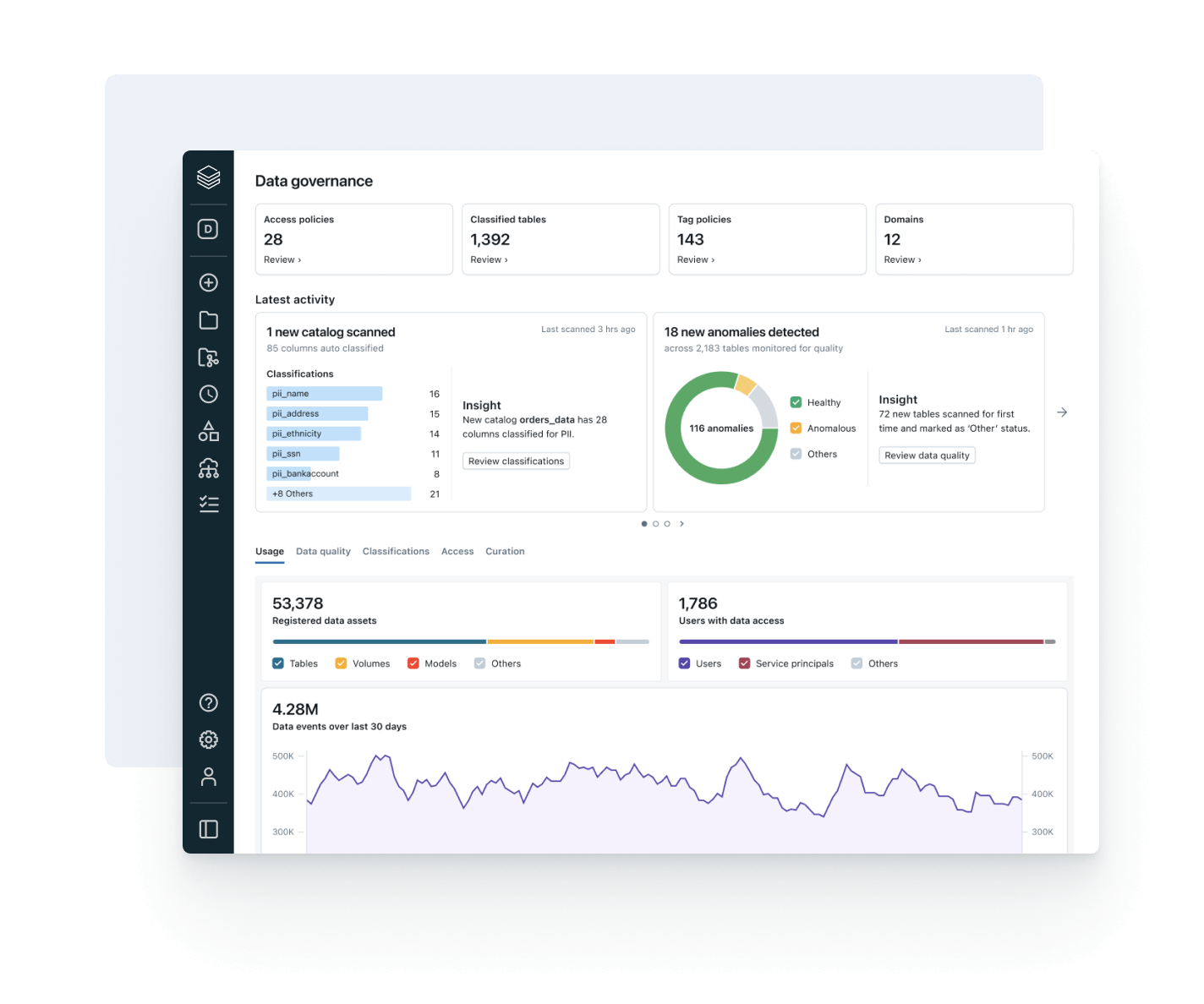

One of the most common pitfalls in modernization is rushing to move a data estate without a clear inventory of what it contains. Organizations often have no centralized awareness of what data assets exist, which are actively used, and which are redundant or obsolete.

Every enterprise in the survey had at least one modernization project fail, be delayed, or be scaled back, representing on average $4M in wasted spending per failed IT modernization initiative.

According to Fred, the "paramount" first step is to implement observability and collect metadata before starting a migration. This process allows teams to identify duplicate, unused, or low-value assets that shouldn't be moved in the first place.
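To make the idea concrete, here is a minimal sketch of a pre-migration triage pass over collected metadata. It is an illustration only: the `Asset` fields (`content_hash`, `last_accessed`), the `triage` function, and the 180-day staleness threshold are all hypothetical, and in practice the records would come from whatever catalog or observability tooling you already run.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Asset:
    """Hypothetical metadata record for one table or dataset."""
    name: str
    content_hash: str     # assumed fingerprint of schema + data
    last_accessed: date   # assumed to come from access logs


def triage(assets, today, stale_after_days=180):
    """Bucket assets into migrate / duplicate / unused.

    - unused: not accessed within the staleness window
    - duplicate: same fingerprint as a more recently used asset
    - migrate: everything else
    """
    cutoff = today - timedelta(days=stale_after_days)
    seen_hashes = set()
    report = {"migrate": [], "duplicate": [], "unused": []}

    # Visit the most recently used assets first so that, among
    # identical copies, the most active one is the one kept.
    for asset in sorted(assets, key=lambda a: a.last_accessed, reverse=True):
        if asset.last_accessed < cutoff:
            report["unused"].append(asset.name)
        elif asset.content_hash in seen_hashes:
            report["duplicate"].append(asset.name)
        else:
            seen_hashes.add(asset.content_hash)
            report["migrate"].append(asset.name)
    return report


# Made-up inventory for illustration.
ASSETS = [
    Asset("sales_2024", "h1", date(2025, 5, 1)),
    Asset("sales_2024_copy", "h1", date(2025, 3, 1)),
    Asset("legacy_orders", "h2", date(2022, 1, 15)),
]

report = triage(ASSETS, today=date(2025, 6, 1))
print(report)
```

With this made-up inventory, only `sales_2024` lands in the migrate bucket; the copy and the long-idle table are flagged for review instead of being moved.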

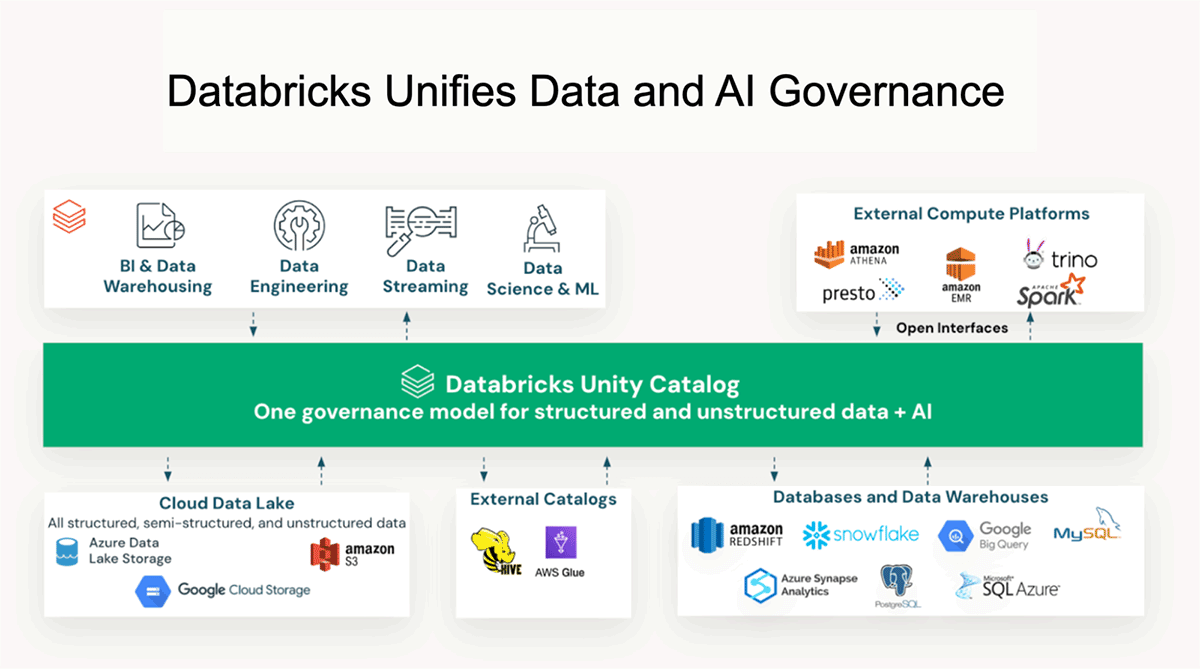

This is the exact problem Databricks' Unity Catalog is designed to solve.

Explore the e-book "Modernise Before You're Left in the Dust" and learn how to transform your data estate into a competitive advantage!

62% of organizations identify a lack of data governance as the primary challenge inhibiting AI initiatives. Without unified control of the data estate, scalable and trustworthy AI deployment stays out of reach.

Nearly every enterprise wants to leverage AI to drive business value. The major hurdle, however, is the immense security and compliance risk of giving AI systems broad access to data that is siloed, ungoverned, and inconsistent.

The prerequisite for deploying AI safely and at scale is a "single pane of glass for your governance layer." A unified governance foundation provides the security controls and peace of mind necessary to unlock data for AI systems.

This foundational layer, provided by Unity Catalog, is what allows for the creation of enterprise-wide knowledge graphs, the "digital thread" that powers intelligent applications.

When evaluating serverless compute, teams often make a simple mistake. They compare the per-hour cost of a serverless option to their existing self-managed compute clusters and conclude it's more expensive. This comparison misses the bigger picture.

Fred points out two factors:

You might be spending more per hour on compute, but you're probably spending less to run the same workload in that environment!

This requires a crucial mental shift from a resource-based cost model (price per hour) to an outcome-based cost model (total price per workload). Paying a higher hourly rate can lead to a lower total cost of ownership when the job gets done faster and more efficiently.
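The arithmetic behind that shift is simple enough to sketch. The numbers below are invented purely for illustration (they are not Databricks pricing or benchmark results), and `workload_cost` is a hypothetical helper: total cost per workload is the hourly rate multiplied by how long the job actually runs.

```python
def workload_cost(rate_per_hour: float, runtime_hours: float) -> float:
    """Total cost of one workload run: hourly rate times runtime."""
    return rate_per_hour * runtime_hours


# Hypothetical figures: serverless costs more per hour but finishes sooner.
self_managed = workload_cost(rate_per_hour=4.0, runtime_hours=3.0)
serverless = workload_cost(rate_per_hour=6.0, runtime_hours=1.5)

print(f"Self-managed: ${self_managed:.2f} per workload")
print(f"Serverless:   ${serverless:.2f} per workload")
print("Serverless cheaper per workload:", serverless < self_managed)
```

In this made-up case the serverless rate is 50% higher per hour, yet the workload costs $9.00 instead of $12.00 because it finishes in half the time. Whether that holds for your workloads is something to measure, not assume.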

To validate progress and demonstrate ROI from your data modernization framework, track these critical metrics:

| Category | Key Metrics |
|---|---|
| Operational Excellence | Time-to-insight (query response times) |
| | Data quality scores (accuracy, completeness, consistency) |
| | System availability & performance |
| | Infrastructure cost per workload |
| Business Impact | Revenue from new data-driven capabilities |
| | Operational cost savings (infrastructure, processes) |
| | Business process efficiency improvements |
| | User adoption & satisfaction (NPS/CSAT) |
| Governance & Compliance | Data assets with documented lineage |
| | Access provisioning time (grant/revoke) |
| | Compliance audit pass rate |
| | Security incidents & response times |
| AI & Analytics Enablement | AI/ML models in production |
| | Governed, accessible data (% of enterprise data) |
| | Advanced analytics adoption (predictive/prescriptive) |
| | Time to launch new data products |
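Several of these metrics reduce to simple ratios once the raw counts are collected. As a rough sketch, assuming a monthly snapshot with hypothetical field names and made-up values, two of them could be computed like this:

```python
def percent(part: float, whole: float) -> float:
    """Return part as a percentage of whole, or 0.0 when whole is zero."""
    return 0.0 if whole == 0 else 100.0 * part / whole


# Hypothetical monthly snapshot; every field name and value is invented.
snapshot = {
    "assets_total": 400,
    "assets_with_lineage": 300,
    "infrastructure_cost_usd": 12000.0,
    "workloads_run": 480,
}

# Governance & Compliance: share of data assets with documented lineage.
lineage_coverage = percent(snapshot["assets_with_lineage"], snapshot["assets_total"])

# Operational Excellence: infrastructure cost per workload.
cost_per_workload = snapshot["infrastructure_cost_usd"] / snapshot["workloads_run"]

print(f"Lineage coverage: {lineage_coverage:.1f}%")
print(f"Cost per workload: ${cost_per_workload:.2f}")
```

Tracking the same ratios month over month matters more than any single reading, since the trend is what shows whether the modernization work is paying off.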

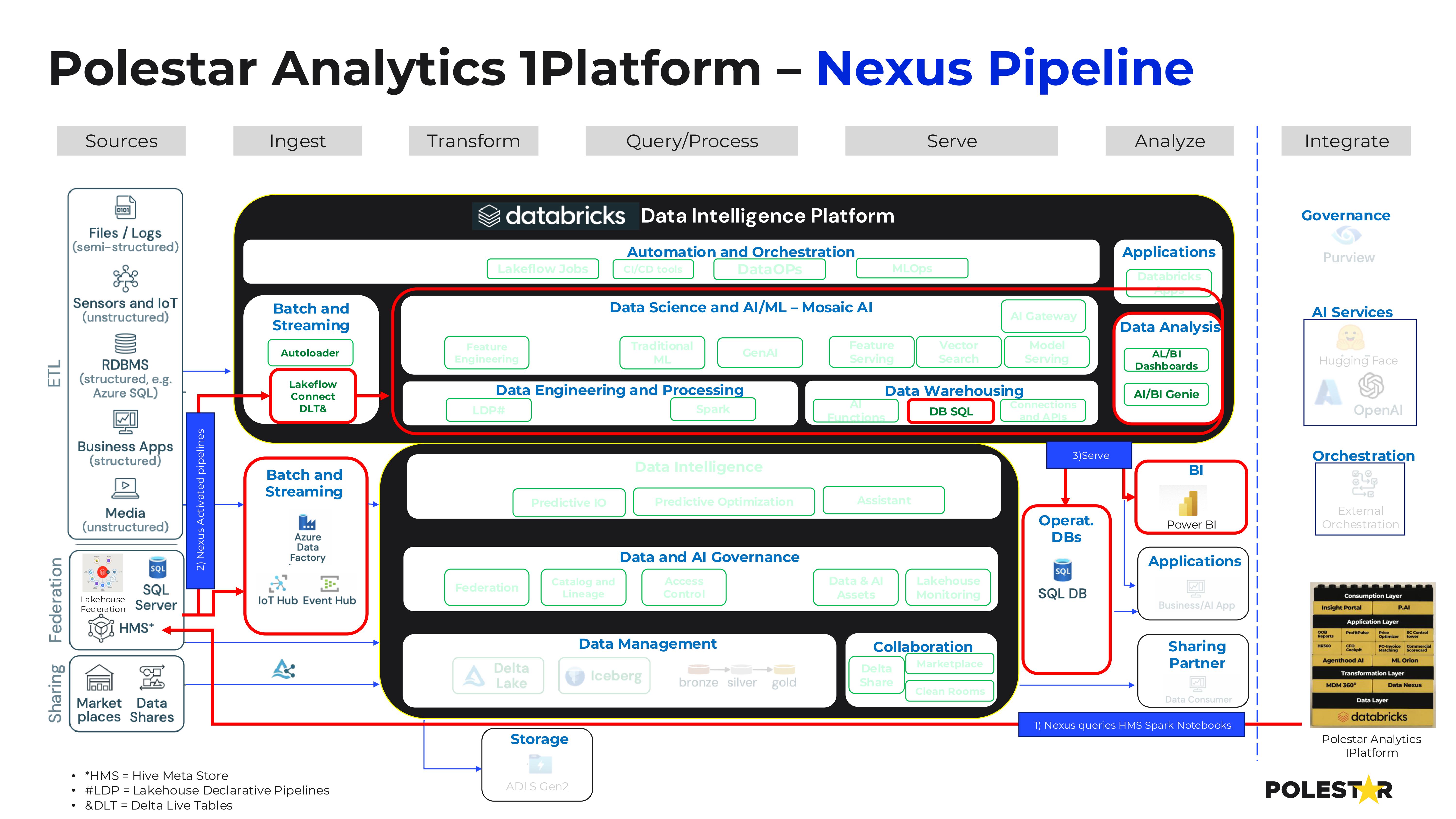

Data Nexus by Polestar Analytics is engineered specifically to address the data modernization challenges highlighted throughout this article. It's a data engineering tool that speeds delivery of composable data models, the foundation of all consumption layers, including visualization, machine learning, and Generative AI agents.

The common thread connecting these takeaways is that successful modernization is a paradigm shift in thinking, not just a technology swap. It requires a clear focus on business outcomes over legacy processes, a deep understanding of your current data state before you move, and a commitment to building a proper foundation for governance and performance. For the right partner on this journey, reach out to Polestar Analytics, a Databricks implementation partner.

As you plan your next data initiative, ask yourself: are you simply moving old problems to a new platform, or are you truly building for the future?

About Author

Khaleesi of Data

Commanding chaos, one dataset at a time!